It’s not unusual to turn on a console and be greeted by a screen of pending downloads for games you have installed. It happened to me today. Several games needed updating, meaning hundreds of gigabytes of data to download. This didn’t particularly bother me. I have an uncapped and relatively speedy internet connection. My primary concerns were exactly how long it would take and how much hard-drive space it would require. What effect all that data would have passing through the network didn’t occur to me. I couldn’t see it so I wasn’t thinking about it.

Do you have any idea how much internet data you use a month? I found the answer surprisingly hard to track down. In a subsection of my TalkTalk account I found a graph charting the last seven days of my internet use, and it told me I used approximately 109GB of data. That seems neither high nor low to me as someone who writes about games and works from home. I could easily increase it.

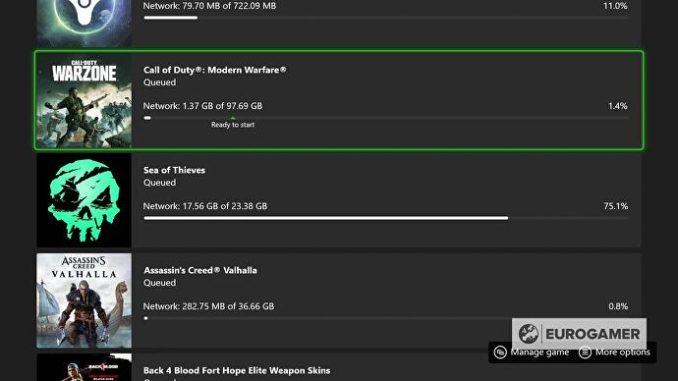

When I turned my PlayStation 5 on recently, I was prompted to download a 60GB Ghost of Tsushima patch, a 55GB Cyberpunk 2077 patch, and a few smaller others. Letting those run would have doubled my use. Or I could have simply downloaded Call of Duty: Warzone, like millions of other people, which is 103GB on its own (not to mention the chunky updates it periodically rolls out), and doubled it that way. The point being: it’s not hard to gobble up lots of data playing games in 2021.

As display resolutions increase, so do game sizes, and we’re now firmly in the realm of 4K and even 8K games with PlayStation 5 and the Xbox Series of consoles. On top of that, the concept of games as a service, as things that live on through multiple seasons of play, adding considerable new content during their lifetimes, has bedded in to become the norm. Doesn’t it seem like a lifetime ago that PlayStation 3 and Xbox 360 made patching commonplace?

Everything is growing. Look at one of the world’s most popular game series, Call of Duty, as an example. IGN charted the rising game sizes of the series on PC a couple of years ago. The figure jumps from 8GB in 2007 for Call of Duty 4: Modern Warfare to ten times that a decade later. It balloons even higher with Call of Duty: Modern Warfare in 2019, to over 200GB, though this is distorted somewhat by Warzone – a whole other game – being included with it. Consider that Warzone passed the 100 million-player mark earlier this year, though, and that’s a lot of COD-related data travelling around. (Modern Warfare/Warzone’s file size would eventually be reduced in a patch, interestingly, as would Fortnite’s.)

Perhaps it is no wonder, then, that when audiences of that size clamour for files of that size, simultaneously, it has a noticeable impact on internet use on a national scale. The Covid-19 pandemic and associated lockdowns exacerbated this. UK broadband network operator Openreach – used by BT, Plusnet, Sky, TalkTalk, Vodafone and Zen customers – reported a 40 percent rise in broadband use in 2020, and cited “large updates to PlayStation and Xbox games consoles, including popular gaming titles such as Call of Duty” as one of the reasons why. These updates became such a concern, in fact, that communications regulator Ofcom created a liaison between gaming companies and internet service providers to keep each other updated about incoming game patches and releases. UK games industry trade body UKIE even wrote an open letter advising gaming companies on best practices for releasing downloads during the pandemic, suggesting scheduling downloads for after midnight and avoiding the peak hours between 5pm and 11pm. The goal of all this was to keep things spaced out and avoid a network pile-up.

“What we were concerned about,” Ofcom’s Huw Saunders tells Eurogamer, “was to actually ensure that the network operators were firstly aware of what was going to happen in advance, so if they had a specific problem about the day of the week, or the time of day a release was due, they could at least talk to the publishers and say, ‘Could you change it by a day or so?’ Or, ‘Could you actually change the time in the day?’ To prevent it coinciding with their anticipated peak period.”

Had Activision Blizzard decided to release Call of Duty: Black Ops Cold War a day later, on 14th November 2020, the same day Amazon live-streamed two Autumn Nations Cup rugby matches – which was largely responsible for the second biggest day of Openreach traffic in 2020 – we could in theory have had a significant network issue.

Traffic patterns have settled a bit this year, because of people going back to the office and being able to go out more, Huw Saunders says, but traffic is still up year on year (he didn’t say by how much). It’s also worth noting that while gaming plays a part, “demand is predominantly driven by video content”, dominating something like 70 to 80 percent of network use, he says.

That liaison between games companies and ISPs is still in place, though. “The publishers do tell the access providers when they’re actually dropping a patch for a new release, and in general terms, there is a process to enable the network operators to push back if they know there’s going to be coincident patches, or whatever, or if there are other issues, and I think that’s broadly working okay.”

To get an idea of how much data flows around the network, consider Openreach’s record day of Boxing Day 2020. On that day, 210 petabytes of data were consumed, which is 210,000 terabytes or 210,000,000 gigabytes, broadly speaking. That’s a lot of data, especially for one day. It’s hard to get a gaming-specific daily equivalent, but Steam – the dominant online PC games marketplace run by Valve – recently shared annual results with equally eye-popping numbers. In 2020, Steam delivered 25.2 exabytes of data. That’s 25,200 petabytes, 25,200,000 terabytes, or 25,200,000,000 gigabytes.
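
For anyone who wants to check the unit arithmetic, here is a minimal Python sketch. It assumes decimal prefixes, where each step up is a factor of 1,000 (binary units, with a factor of 1,024, would give slightly different figures), and the function name is ours, chosen purely for illustration.

```python
# Decimal (SI) prefixes: each step up is a factor of 1,000.
# Assumption: the figures quoted are decimal, not binary (1,024-based) units.
UNITS = ["GB", "TB", "PB", "EB"]

def convert(value, from_unit, to_unit):
    """Convert a data volume between GB, TB, PB and EB using decimal prefixes."""
    steps = UNITS.index(from_unit) - UNITS.index(to_unit)
    return value * 1000 ** steps

openreach_record_day_gb = convert(210, "PB", "GB")  # Openreach, Boxing Day 2020
steam_2020_gb = convert(25.2, "EB", "GB")           # Steam, whole of 2020

print(f"Openreach record day: {openreach_record_day_gb:,.0f} GB")
print(f"Steam in 2020: {steam_2020_gb:,.0f} GB")
```

Bear in mind the two figures are not directly comparable: one covers a single day on one UK network, the other a full year of Steam traffic worldwide.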

Said Valve in the same report: “Various countries’ government bodies approached us and other large internet companies to see how we could help mitigate the rise in global traffic that ISPs were seeing, because it was getting to a point where it was affecting people’s ability to work from home and their children’s remote schooling. In response, we made some changes to help manage the bandwidth during work and school hours, and to defer updates to the evenings.”

The point of this is to highlight how much gaming data is being pumped around. And just because you cannot see it leave your house and travel along the pipes to a data centre somewhere, doesn’t mean it comes without an associated cost. And the cost, in this case, is power.

Everything in the equation costs power: the machine you turn on to play the game, the TV you turn on to see it, and the modems and routers you connect to the internet with, and through which the information flows until the servers send you the information you need.

Servers are computers that look like large, exposed motherboards, slotted one on top of another into something roughly resembling a cafeteria tray rack, which is coincidentally called a rack. They’re there to literally serve you data when you request it, which means they’re as powerful as possible in order to serve as many people as quickly as possible. As a result of this processing power, they need a lot of fans for cooling, which makes them very loud.

What this leads to are warehouse-like buildings known as data centres (server farms colloquially) that are specifically designed to house them. They are noisy places, blasted by air conditioning, and they’re often close to internet exchanges, for sending and receiving data quickly, and have their own bespoke power solutions, such as substations that plug directly into the National Grid.

Exactly how much power they use is a tricky question to answer, because every company has its own way of doing things. Companies like Google and Microsoft are so big they have their own bespoke data centres and dedicate enormous resources to them. Google has an entire data centre subsite that explores the renewable energy advances it has made in this area, so it clearly wants to brag a bit, and Microsoft recently and successfully experimented with underwater servers. Other smaller companies have to settle for sharing space in server-hosting facilities like Amazon Web Services’, for example.

What makes it even trickier to pin down is that companies with global presences have data centres all over the world. Consider Valve with Steam. In that same report mentioned earlier, Valve noted how it – in one year – upgraded one network site in Chicago, added three new sites in Frankfurt, Dallas and Buenos Aires, and had “at least” another two-to-four planned for the first half of 2021. How many that means the company has around the world, I don’t know, but they won’t all be state of the art.

Another huge thing to consider on top of this is cloud computing, which involves a server not just handing data to you, but performing a computing task on your behalf. This increases demand on the machines in the data centres dramatically, and with it, the heat they create and cooling they need. In other words: more power. There are now Google Stadia, Amazon Luna, PlayStation Now, and Xbox Cloud Gaming (formerly xCloud), which means some of the biggest companies in the world, and not just in gaming, are involved.

The question we’re asking, fuelled by the urgency of the recent Intergovernmental Panel on Climate Change (IPCC) report, which showed temperatures rising faster around the world than we expected, is what impact does all of this data centre-powered gaming have on the environment? What does it really cost when you download a game, install an update, or play one via the cloud?

Simple question; not so simple answer

There has been next to no reporting on gaming-related downloads’ climate impact in the mainstream press, and little amongst the specialist press either, for good reason – it’s difficult. Looking at an example of the debate around video streaming – a similar, if still different phenomenon – helps to illustrate the problem. In 2019, one study from environmentally conscious think tank The Shift Project sparked a wave of headlines with its conclusion that streaming just 30 minutes of online video produced the same amount of emissions as driving a car almost four miles. But those figures were disputed, and the media’s reporting was largely inaccurate as a result.

There are a few reasons why things got muddled. For one, a slip of the tongue from a spokesperson during an interview meant some of The Shift Project’s figures were quoted in bytes instead of bits, which then meant the results were reported by the press as eight times higher than they actually were in the study (because a byte is eight bits, and a bit, if you didn’t know, is a unit of information represented by a binary digit – hence ‘bit’ – and can also be called a ‘shannon’, after Claude E. Shannon, the founder of information theory, but this is a tangent!). Many outlets focused on Netflix in particular, too, which isn’t accurate (the study deliberately focused on averages across the many players in the industry, as emissions vary pretty wildly depending on the provider in question – Netflix actually being towards the lower end). And then, even beyond that, there’s an ongoing debate about how some of the study’s numbers were pulled together (there’s disagreement about the “final 10 percent” discrepancy, thanks to the science of measuring the energy intensity of data transmission in particular evolving rapidly). Long story short: messy reporting aside, the science itself is still far from settled.
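
To see why a bits-versus-bytes slip inflates a result by exactly a factor of eight, here is a small Python sketch. The bitrate is a made-up number for illustration only, not a figure from The Shift Project's study, and the function name is our own.

```python
BITS_PER_BYTE = 8

def data_volume_megabytes(bitrate_megabits_per_s, seconds):
    """Data transferred, in megabytes, for a stream whose bitrate is given in megabits per second."""
    return bitrate_megabits_per_s * seconds / BITS_PER_BYTE

# Hypothetical bitrate, for illustration only; not taken from the study.
bitrate = 3          # megabits per second
half_hour = 30 * 60  # seconds

correct = data_volume_megabytes(bitrate, half_hour)
# The mix-up: treating the same number as megabytes per second skips the division by 8.
misread = bitrate * half_hour

print(f"Correct: {correct:.0f} MB, misread: {misread:.0f} MB, ratio: {misread / correct:.0f}x")
```

Whatever bitrate you plug in, the ratio stays at eight, and any energy or emissions figure derived from the data volume is inflated by the same factor.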

Crucially, though, that doesn’t mean it’s an impossible question to answer; just that the answer itself is changing all the time. Effectively, that answer comes in three key parts.

The climate impact of gaming’s internet usage depends on a) the efficiency of the infrastructure, such as data transmission networks and, more importantly, data centres, which determines how much energy they consume to do their jobs; b) the power sources of that infrastructure, which determines how much CO2 equivalent emissions are produced by consuming that energy; and c) the demand for the services themselves. Beyond that, we also need to take into account the local energy consumption – how much your console is drawing from the plug – and a few other factors, but much more on that later.
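
One rough way to picture how those three parts combine is as a simple multiplication: demand, times the energy needed per unit of demand, times the emissions per unit of energy. The Python sketch below is our own simplification under that assumption, not a model from the IEA or anyone else quoted here, and every number in it is a placeholder rather than a measurement.

```python
def emissions_kg_co2e(data_gb, kwh_per_gb, kg_co2e_per_kwh):
    """Emissions = demand (c) x energy intensity (a) x carbon intensity of the power source (b)."""
    return data_gb * kwh_per_gb * kg_co2e_per_kwh

# All three inputs are hypothetical placeholders, not measured values.
baseline = emissions_kg_co2e(data_gb=100, kwh_per_gb=0.05, kg_co2e_per_kwh=0.2)

# Demand doubles while the infrastructure becomes twice as efficient:
# the two changes cancel out and emissions stay where they were.
more_demand_more_efficient = emissions_kg_co2e(data_gb=200, kwh_per_gb=0.025, kg_co2e_per_kwh=0.2)

# Same demand and efficiency, but a cleaner power source: emissions fall.
cleaner_power = emissions_kg_co2e(data_gb=100, kwh_per_gb=0.05, kg_co2e_per_kwh=0.1)

print(baseline, more_demand_more_efficient, cleaner_power)
```

The sketch leaves out local energy use, such as the console and TV, which would need to be added on top.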

Data centres and efficiency: peering inside the “black box”

Keeping things general, let’s start with efficiency. The first thing to look at is how data centres are changing over time. Generally, in cloud computing especially, the data centre workloads are the main source of CO2e (carbon dioxide equivalent emissions). That means that if they’re getting dramatically more efficient, then that has a quite dramatic impact on the emissions themselves – and it turns out, broadly speaking, that they are.

According to the International Energy Agency, an intergovernmental organisation that aims to advise on the transition to clean energy, since 2015 in particular both internet traffic and data centre workloads have shot up, but efficiency gains in data centres (the ability to do more computing while using less energy, and therefore theoretically producing less CO2e) mean that rise has been entirely offset.

In theory then, gaming using more of the internet doesn’t actually mean a rise in emissions. “Problem solved,” you might be thinking. If only. For one, the below graph shows averages, taking into account the efficiency improvements across the entire range of data centres, but gaming-related ones could be right at the bottom of that scale – so more gaming internet usage could technically still mean more emissions.

More importantly still, when talking about emissions there’s a crucial difference between improvements in efficiency and improvements in absolute terms.

The above graph is a good example: data centre efficiency has improved so much that workloads are offset, keeping the green line flat. But in absolute terms, that flat green line shows data centres are using just as much energy now as they were in 2015, or 2010. At this point it’s worth remembering the overall goal: as the IPCC reports recommend, we need to be reducing the amount of CO2e we produce, in absolute terms, by 45 percent from 2010’s levels by 2030 – not keeping it constant.

Reducing CO2e production comes in two ways, then: reducing the amount of energy you use – which, according to the above chart, isn’t improving fast enough to manage next to our growing internet consumption – or, changing the source of power itself from fossil fuels such as coal and natural gas, to fully renewable sources like wind, solar, or hydropower. This is the second part of the answer and, again, is also where things get tricky.

Companies, emissions, and “the grid”

George Kamiya is a digital/energy analyst at the IEA, and the author of a number of reports on data centre and related technology’s energy consumption – including the IEA’s fact-checking response to the press’s coverage of that Shift Project study. Data centres are “like a black box,” he tells Eurogamer. Generally, companies are very reluctant to share specifics, and that means the question of what exactly is powering them is difficult to fully answer – and on top of that, there are complexities in how the power grid itself actually works, and those complexities matter, when it comes to questions of who’s powering themselves with what.

To oversimplify a fair bit, the key thing to think about is what the “grid” itself is made up of. In Great Britain, for instance, in August 2021 the national grid’s power was made up of 36 percent gas, 18 percent wind, 15 percent nuclear, 6 percent biomass, 2 percent coal, 6 percent solar, 15 percent imports, and 1 percent hydropower. All in all, 41 percent of the British national grid came from “zero carbon” sources, at a monthly peak of 73.89 percent – you can actually track this live on the National Grid’s app. This varies from country to country – on a per-capita basis, France’s carbon intensity is lower than Britain’s for instance, and Germany’s slightly higher – but, crucially, in some places like the US it also varies significantly from state to state.

There are also different definitions of “carbon neutral” and different methods for reaching it. The biggest companies can actually build their own energy assets – wind farms, say – and keep them very close to their data centres, more or less powering them directly. Apple is one company that’s been known to have done this. But they can also purchase things called Renewable Energy Certificates, or RECs, that essentially say the buyer “owns” one megawatt-hour of renewable electricity. The problem with RECs is twofold. First, generally they don’t really contribute much at all in terms of adding new renewable energy to the grid. In the US especially, thanks to some complexities in their supply and pricing, often RECs are so cheap that a large company buying them from a supplier doesn’t really contribute much to that supplier’s ability to expand their operations.

Second, because the buyer is still taking their actual electricity from the grid itself, which can be a mix of all kinds of energy, RECs effectively scrub over the initial “dirty” energy that’s used – a company might power its data centres from a grid that’s 90 percent non-renewable energy, for instance, releasing greenhouse gases into the environment, and then purchase RECs to match that amount after the fact, in order to claim it’s 100 percent renewable powered.

Then there are Power Purchase Agreements, or PPAs, which are effectively direct contracts between a consumer of energy, like a data centre operator, and a provider who generates that energy for them. These are, broadly, seen as better than RECs because they can involve an agreement to actually build a new source of renewable energy for the buyer to buy that energy from, or at least act as a promise that enables that supplier to secure investment to then match the promised demand. But they’re still not perfect.

As Kamiya put it to Eurogamer, “To me, this [method] poses several problems in that on an annual basis, [companies] can claim to say, ‘Our data centres are 100 percent renewable’, because if for example Google consumed 10 terawatt hours of electricity, they have enough of these power purchase agreements, with different electricity providers, on an annual basis, globally, to say, ‘Oh, we generated 10 terawatt hours of electricity, therefore, we’ve offset our electricity use.’ But in the real world, of course it matters where your data centre is operating, right? If you’re running in a coal-rich region, like Virginia, which Greenpeace has criticised Amazon and others for, sure, you could buy a wind contract in somewhere else like California, or wherever – in the Netherlands, or Denmark – but that doesn’t really offset the carbon that’s coming from the data centre that’s burning that coal.”

Bringing this back to gaming, there are some breadcrumbs of information to be found. Greenpeace – which, aptly, cites “lack of transparency” from companies as the number one barrier to a 100 percent renewably powered internet – offers the most in-depth breakdown in its 2017 Click Clean report. The catch of course is that being a 2017 report, based on 2016 data, much has already changed in the time since. But it’s a start. As of 2017 here’s how things looked.

Greenpeace broke this report down into sections for different service providers – video streaming like Netflix and YouTube, messaging like WhatsApp, social media like Facebook, and so on – but not for video games. To pick out some gaming-related figures from the above summary, then, let’s start with Amazon Web Services, the world’s largest cloud company that’s used both by Amazon’s new Luna platform and others in the gaming space, including Nintendo (and incidentally, for the sake of transparency, Eurogamer’s servers).

AWS scored poorly by Greenpeace’s standards. As of 2017, it used renewable energy for just 17 percent of its data centre operations and also ranked “F” for transparency, thanks to its poor record at the time for providing customers with any energy data on request, and a statement that “five regions are ‘carbon neutral'” but, in Greenpeace’s words, “no definition of what this means, or how it is able to deliver this claim.” Another key issue with Amazon was its rapid expansion in the US state of Virginia, the home of “Data Centre Alley”, thanks to heavy tax incentives for data centre operators, the nationally central location, and proximity to the US capital. As of 2017, Virginia was powered by 93 percent non-renewable energy (a fairly even three-way split of natural gas, coal, and nuclear), mostly provided by the near-monopolistic Dominion Energy.

Google, meanwhile, which runs game streaming service Stadia, ranked “A” overall, using renewable energy for 56 percent of its operations at the time, the main caveat being its lack of facility or region-level energy demand data (meaning it’s hard for customers who use Google’s cloud services to know whether their location is a green one or not).

Microsoft, which runs its own cloud services for Xbox, including Xbox Cloud Gaming, and has a partnership with Sony for usage of its Azure cloud services too, was cited as improving steadily, scoring B overall. Previously Microsoft had claimed that its data centres were already 100 percent renewably powered, but was in fact mostly relying on Renewable Energy Certificates for this rather than actual new renewable energy fed into the grid. The company then changed that to a more open approach that measures the amount of renewable energy directly powering its data centres. At the time, its new short-term target in 2016-2017 was to source 50 percent renewable power for its data centres by 2018.

Unfortunately, this 2017 report was the last of its kind from Greenpeace, but in the time since, many companies have improved their own transparency somewhat, likely thanks to the ongoing conversation around the impact of using the internet itself – for which Greenpeace, and others like The Shift Project, deserve credit.

In 2020, Microsoft, for instance, unveiled overall plans to be “carbon negative by 2030”, acknowledging again that its use of RECs isn’t enough. “This is an area where we’re far better served by humility than pride,” the company wrote. It’ll achieve this, in part, by shifting to “100 percent supply of renewable energy” for all of its data centres, buildings and campuses by 2025, using power purchase agreements to source that energy. In order to be carbon negative by 2030, the company has promised to remove more carbon than it emits by using NETs (negative emissions technologies), “potentially including afforestation and reforestation, soil carbon sequestration, bioenergy with carbon capture and storage (BECCs), and direct air capture (DAC).” The company will also publish annual environmental sustainability reports – here’s the first, 96-page report published in 2020.

Google claims to have already fully matched the energy consumption of its data centres and offices with renewable purchases since 2017 – those PPAs that Kamiya mentioned – and has since unveiled a new target of operating on “entirely carbon-free energy 24/7” by 2030. “We’re the first major company to commit to sourcing 24/7 carbon-free energy for our operations, and we aim to be the first to achieve it,” the company said. A key step will be the introduction of large-capacity batteries for storing backup power for data centres, as opposed to the current solution of diesel-powered generators that activate when grid power fails. The aim is for the first of these to be implemented in Belgium this year.
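
The gap between matching a year's consumption with renewable purchases and actually running on carbon-free energy around the clock can be sketched with a toy example in Python. The numbers are invented, four periods stand in for a whole year, and the function names are ours; this is an illustration of the accounting idea, not of how Google or anyone else measures it.

```python
def annual_match(demand, clean_supply):
    """Share of demand covered when only the totals over the whole period are compared."""
    return min(sum(clean_supply) / sum(demand), 1.0)

def hourly_match(demand, clean_supply):
    """Share of demand covered when clean energy only counts in the period it is generated."""
    covered = sum(min(d, s) for d, s in zip(demand, clean_supply))
    return covered / sum(demand)

# Hypothetical MWh per period: steady data centre demand, and a clean supply
# that is plentiful in some periods and absent in others (think solar at night).
demand = [10, 10, 10, 10]
clean_supply = [25, 15, 0, 0]

print(f"Matched on totals: {annual_match(demand, clean_supply):.0%}")      # 100%
print(f"Matched period by period: {hourly_match(demand, clean_supply):.0%}")  # 50%
```

On paper the totals line up, but in half of the periods the facility in this example would still be drawing whatever else is on the grid.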

Kamiya noted that while companies like Google and Microsoft going fully renewable 24/7 isn’t “super important” in the “real world,” the knock-on impact could be “much more transformational for other parts of electricity. So, if we could figure out and track electricity demand across all uses, and then figure out how to balance that demand so that, for example, when there’s a lot of sun, maybe all the AC units kick in to reduce the carbon emissions from electricity. That’s kind of the next step that some of these companies are on.”

Amazon now also releases annual sustainability reports, the latest of which states its goals of reaching “net-zero carbon by 2040” across the entire business, and powering its operations, including AWS data centres, with 100 percent renewable energy by 2025. It calculates its percentage of renewable energy by adding the amount of renewable energy in the grid where it uses that energy to the amount of renewable energy produced by its own “Amazon Renewable Energy Projects”, then dividing that by its own energy usage. It aims to achieve that 100 percent goal by “improving the energy efficiency of our operations and adding new renewable energy to the electric grids where we operate across the world. We will partner with other companies, utilities, policy makers, and regulators to accelerate plans and policies that increase the clean energy on the grids that serve Amazon and our customers.” This isn’t entirely clear, but seemingly it suggests the goal will be achieved through the PPAs that the likes of Google already use to reach 100 percent renewable sourcing.
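
Taken at face value, the calculation Amazon describes amounts to a simple ratio. The Python sketch below is our reading of that description, with invented numbers; Amazon's actual methodology may well include details that are not captured here.

```python
def renewable_share(grid_renewable_mwh, project_renewable_mwh, total_usage_mwh):
    """(renewable energy from the local grid + renewable energy from own projects) / total usage."""
    return (grid_renewable_mwh + project_renewable_mwh) / total_usage_mwh

# Hypothetical figures, for illustration only.
total_usage = 1000             # MWh consumed
grid_renewable_fraction = 0.2  # 20 percent of the local grid mix is renewable
from_grid = total_usage * grid_renewable_fraction
from_projects = 450            # MWh generated by the company's own renewable projects

share = renewable_share(from_grid, from_projects, total_usage)
print(f"Renewable share: {share:.0%}")
```

Note that under this reading, the output of a company's own projects counts towards the total wherever and whenever it is generated, which is exactly the kind of matching Kamiya questions above.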

Worth noting is that overall, while Amazon’s carbon intensity (the carbon to earnings ratio) decreased by 16 percent, its absolute carbon footprint as a whole increased notably in 2020, by 19 percent year-on-year, which it attributes to the business’ growth overall. “Amazon has some carbon emission numbers,” Kamiya said, “but nobody really knows how much electricity Amazon Web Services [specifically] is using. Greenpeace has been hammering them, constantly, on this data disclosure – and on where they decided to build data centres, like in Virginia when there was a lot of pressure a few years ago.”
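
Those two percentages are not contradictory. If carbon intensity is simply emissions divided by some measure of business activity, then a falling ratio alongside rising emissions implies the business grew faster than its emissions did. The Python sketch below works through that arithmetic; the implied growth figure is our own back-of-the-envelope result under that assumption, not a number reported by Amazon.

```python
# Year-on-year changes quoted in the article.
emissions_change = 0.19   # absolute carbon footprint up 19 percent
intensity_change = -0.16  # carbon intensity down 16 percent

# Assumption: intensity = emissions / activity, so activity = emissions / intensity.
emissions_factor = 1 + emissions_change
intensity_factor = 1 + intensity_change
implied_activity_factor = emissions_factor / intensity_factor

print(f"Implied growth in business activity: {implied_activity_factor - 1:.0%}")
```

Under that assumption the underlying business metric would have grown by roughly 42 percent, which is how emissions per unit can fall while the total still climbs.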

Sony, Valve, and Nintendo don’t provide data centre services in the same way that the likes of Microsoft, Google, and Amazon do. Nonetheless, Sony does publish a corporate sustainability report with some firm data on its overall emissions and goals. In 2010, it set the goal of “achieving a zero environmental footprint throughout the lifecycle of its products and business activities by the year 2050”, and this has remained the same as of the latest 2021 report. Overall, according to the company, “in fiscal 2020, Sony’s site emissions of greenhouse gases totaled approximately 1.39 million tons, which was up by approximately 1 percent from fiscal 2019 but down approximately 11 percent from the fiscal 2015 level.”

An important caveat, again, is that Sony is not the same kind of business as Microsoft or Google. The understanding is that its data centres are mostly operated via external partners, including Microsoft’s Azure, although its goals for working with those partners, and the nature of those partnerships themselves, could be clearer.

As per the sustainability report, “Sony offers a wide variety of network services including gaming, Internet, and streaming services for movies and music. These services rely on data centers with facilities and components for transmitting large volumes of data to ensure seamless services for users. The amount of electricity consumed by data center equipment and facilities is increasing with the growth of network businesses. Sony’s environmental mid-term targets include the target of prioritizing the use of energy-efficient data centers. Sony has been working on this by developing guidelines in fiscal 2016 that have been put into effect since fiscal 2017.” Eurogamer was not able to find public details of those guidelines, nor data for its network services’ carbon footprint specifically.

Nintendo also publishes a sustainability report, although it does not cite specific numerical renewable energy targets for the whole business. It cites 100 percent renewable energy purchases for its US offices in Redmond and its suburban shipping facility, and publishes data on its overall CO2e production. Notably, the 2020 total is down 46 percent on 2019, although Nintendo does not publish what’s referred to as Scope 3 emissions data, which is almost always the largest figure, nor specific emissions data for network-related services.

Finally, Valve does not have a corporate environmental or sustainability report that Eurogamer could locate. According to one investigation from specialist site SamKnows, Steam simultaneously uses multiple external partners, including Level3, EdgeCast (Verizon), Stackpath, and Akamai, alongside its own content delivery networks (CDNs) to provide the data centre and transmission services that allow you to download its games. CDNs are not the same as data centres but, to give a rough idea, Akamai is one of the largest in the world, and rated B overall in the 2017 Greenpeace report, albeit with just 16 percent renewable energy usage at the time. According to its own sustainability reports the company has since met its 2020 target of sourcing 50 percent renewable energy by adding new renewables to the grid, but does not appear to have published any further hard numerical targets.

Eurogamer contacted each of Microsoft, Sony, Nintendo, Google, Amazon, and Valve for this report. At the time of writing, Microsoft has not responded to Eurogamer’s requests. Valve and Google were not able to provide anyone with the relevant information to speak to Eurogamer. Sony was not able to respond to Eurogamer’s requests in time for the publication of this piece. Amazon provided the following statement, which repeats the key points of its sustainability report, and also pointed us to that report for further information.

“In 2019, Amazon co-founded The Climate Pledge, a commitment to be net zero carbon across our business by 2040, and we are on a path to using 100 percent renewable energy by 2025, five years ahead of our original goal of 2030. For Luna, which runs on Amazon Web Services, we are continuously working to limit waste and reduce energy use in our data centers. In fact, a study by 451 Research [commissioned by Amazon] found that AWS’s infrastructure is 3.6 times more energy efficient than the median of surveyed enterprise data centers.”

Nintendo provided the below statement:

“To deliver our company goal of ‘Putting Smiles on the Faces of Everyone Nintendo Touches,’ we feel that one of our most important responsibilities is to protect the environment to provide a healthy planet for future generations.

“With respect to the downloadable software we offer, since it can be purchased by consumers at home or elsewhere and saved on their console or SD card, we believe that it contributes to reducing environmental impact from the manufacturing and transportation of our products and packaging materials. We do not disclose specific details about the reduction of that environmental impact.
